Implementing Circuit Breaker Pattern in Go for Fault Tolerance

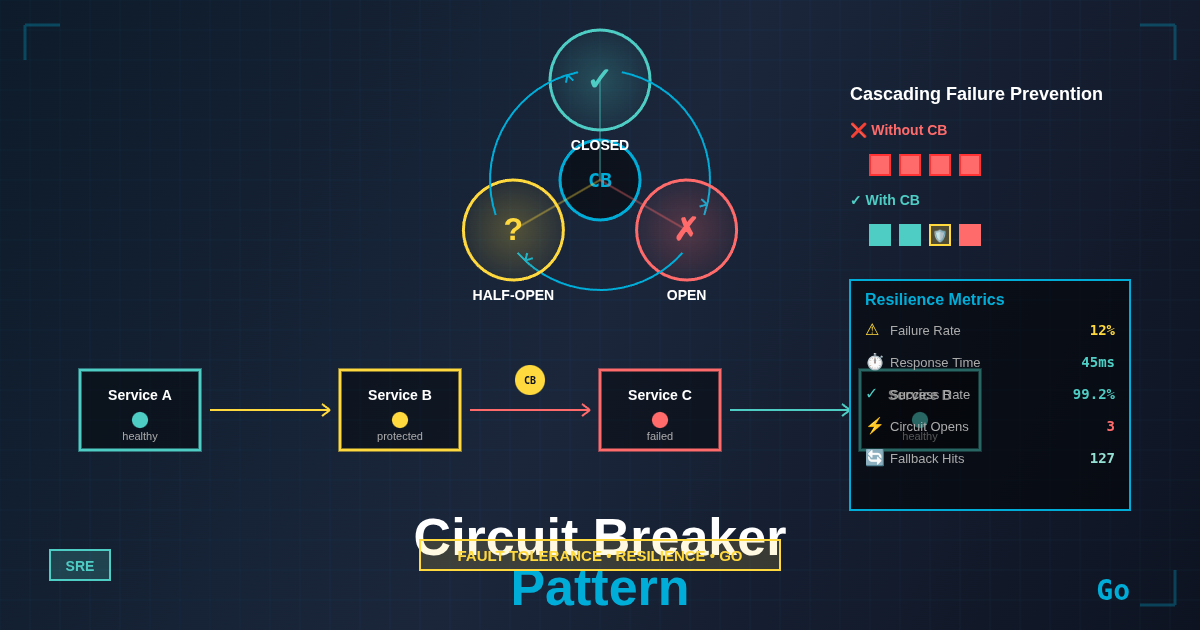

In today’s distributed systems landscape, services are interconnected through complex networks of API calls, database queries, and external service dependencies. When one service experiences issues, it can create a cascading failure that brings down entire systems. This is where the Circuit Breaker pattern becomes invaluable—acting as a protective mechanism that prevents failing services from overwhelming the entire system.

The Circuit Breaker pattern, inspired by electrical circuit breakers, monitors service calls and “trips” when failures exceed a certain threshold, temporarily blocking requests to give the failing service time to recover. This pattern is essential for building resilient microservices that can gracefully handle partial failures and maintain system stability even when dependencies are unreliable.

Go is a good fit for circuit breakers: the standard library's sync, sync/atomic, and context packages cover the synchronization and cancellation the pattern needs. This article walks through a basic circuit breaker, an HTTP-aware variant, and a cache-backed fallback, then covers best practices, common pitfalls, performance, and monitoring.

Prerequisites

Before diving into circuit breaker implementation, you should have:

- Intermediate Go knowledge: Understanding of goroutines, channels, mutexes, and interfaces

- HTTP client/server concepts: Familiarity with making HTTP requests and handling responses

- Basic understanding of distributed systems: Knowledge of microservices architecture and common failure modes

- Error handling patterns: Experience with Go’s error handling and context usage

- Testing fundamentals: Ability to write unit tests and understand mocking concepts

Understanding the Circuit Breaker Pattern

Core Concepts and States

The Circuit Breaker pattern operates in three distinct states, each serving a specific purpose in maintaining system resilience:

Closed State: The circuit breaker allows all requests to pass through to the protected service. It monitors the success and failure rates, keeping track of recent call statistics. This is the normal operating state when everything is functioning correctly.

Open State: When the failure threshold is exceeded, the circuit breaker “trips” and enters the open state. All requests are immediately rejected without attempting to call the protected service, returning a predefined error or fallback response. This prevents further load on the failing service.

Half-Open State: After a configured timeout period in the open state, the circuit breaker transitions to half-open. It allows a limited number of test requests to determine if the protected service has recovered. If these requests succeed, the circuit breaker closes; if they fail, it returns to the open state.
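The three states and their transitions can be summarized as a small transition function. The sketch below is illustrative only: the Event type and the next function are hypothetical names that do not appear in the implementations in this article, and it assumes a single successful probe is enough to close the circuit.

```go
package main

import "fmt"

// State mirrors the three circuit breaker states described above.
type State int

const (
	Closed State = iota
	Open
	HalfOpen
)

// Event is a hypothetical input to the state machine.
type Event int

const (
	ThresholdReached Event = iota // too many failures while closed
	TimeoutElapsed                // the open period is over
	ProbeSucceeded                // a test request succeeded while half-open
	ProbeFailed                   // a test request failed while half-open
)

// next returns the state that follows s when event e occurs.
// Events that do not apply to the current state leave it unchanged.
func next(s State, e Event) State {
	switch {
	case s == Closed && e == ThresholdReached:
		return Open
	case s == Open && e == TimeoutElapsed:
		return HalfOpen
	case s == HalfOpen && e == ProbeSucceeded:
		return Closed
	case s == HalfOpen && e == ProbeFailed:
		return Open
	}
	return s
}

func main() {
	s := Closed
	events := []Event{ThresholdReached, TimeoutElapsed, ProbeFailed, TimeoutElapsed, ProbeSucceeded}
	for _, e := range events {
		s = next(s, e)
		fmt.Println("state is now", s)
	}
}
```

Keeping the transitions in one pure function makes them easy to test in isolation from timers and locking.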

Key Components

A robust circuit breaker implementation requires several key components:

- Failure Counter: Tracks the number of consecutive failures or failure rate over a time window

- Success Counter: Monitors successful requests to determine recovery

- Timeout Configuration: Defines how long to wait before attempting recovery

- Threshold Settings: Specifies the failure rate or count that triggers the circuit breaker

- Fallback Mechanism: Provides alternative responses when the circuit is open

Basic Circuit Breaker Implementation

Let’s start with a fundamental circuit breaker implementation that demonstrates the core concepts:

package main

import (
	"errors"
	"fmt"
	"sync"
	"time"
)

// State represents circuit breaker states
type State int

const (
	Closed State = iota
	Open
	HalfOpen
)

// CircuitBreaker protects calls to unreliable services
type CircuitBreaker struct {
	mu          sync.Mutex
	state       State
	failures    int           // consecutive failures since the last success
	nextRetry   time.Time     // earliest time a probe is allowed while open
	maxFailures int           // consecutive failures that trip the breaker
	timeout     time.Duration // how long the breaker stays open
}

// New creates a circuit breaker
func New(maxFailures int, timeout time.Duration) *CircuitBreaker {
	return &CircuitBreaker{
		state:       Closed,
		maxFailures: maxFailures,
		timeout:     timeout,
	}
}

// Call executes a function with circuit breaker protection.
// The mutex is held while fn runs, so calls are serialized.
func (cb *CircuitBreaker) Call(fn func() error) error {
	cb.mu.Lock()
	defer cb.mu.Unlock()

	// Check if call is allowed
	if cb.state == Open {
		if time.Now().Before(cb.nextRetry) {
			return errors.New("circuit breaker is open")
		}
		// Timeout elapsed: let this call through as a probe
		cb.state = HalfOpen
	}

	// Execute function
	err := fn()

	// Update state based on result
	if err != nil {
		cb.failures++
		if cb.failures >= cb.maxFailures {
			cb.state = Open
			cb.nextRetry = time.Now().Add(cb.timeout)
		}
		return err
	}

	// Success - reset
	cb.failures = 0
	cb.state = Closed
	return nil
}

// Example usage
func main() {
	cb := New(3, 5*time.Second)

	// Simulated failing service
	failingService := func() error {
		return errors.New("service unavailable")
	}

	for i := 0; i < 10; i++ {
		err := cb.Call(failingService)
		fmt.Printf("Attempt %d: Error=%v\n", i+1, err)
		time.Sleep(1 * time.Second)
	}
}

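This basic implementation demonstrates the fundamental circuit breaker mechanics. The circuit starts closed, counts consecutive failures, opens once the count reaches maxFailures, and after the timeout lets the next call through as a half-open probe. Note that Call holds the mutex while fn runs, so calls are serialized; that keeps the example short but would limit throughput in a real service.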
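One possible refinement is to hold the mutex only while reading or updating state, and to return a sentinel error so callers can tell a rejected call from a failed one. The sketch below is an assumption-laden variant, not the implementation above: Breaker, NewBreaker, ErrOpen, allow, and record are hypothetical names, and it admits exactly one probe while half-open. A call that started while the circuit was closed and finishes later can still influence the state, which a fuller implementation would guard against.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
	"time"
)

// ErrOpen is returned when a call is rejected without being attempted.
var ErrOpen = errors.New("circuit breaker is open")

// errUnavailable simulates a failing dependency in the demo below.
var errUnavailable = errors.New("service unavailable")

type State int

const (
	Closed State = iota
	Open
	HalfOpen
)

type Breaker struct {
	mu          sync.Mutex
	state       State
	failures    int
	nextRetry   time.Time
	maxFailures int
	timeout     time.Duration
}

func NewBreaker(maxFailures int, timeout time.Duration) *Breaker {
	return &Breaker{maxFailures: maxFailures, timeout: timeout}
}

// allow decides, under the lock, whether a call may proceed.
func (b *Breaker) allow() bool {
	b.mu.Lock()
	defer b.mu.Unlock()
	switch b.state {
	case Closed:
		return true
	case Open:
		if time.Now().Before(b.nextRetry) {
			return false
		}
		b.state = HalfOpen // this caller becomes the single probe
		return true
	default: // HalfOpen: a probe is already in flight
		return false
	}
}

// record updates the counters and state after a call has finished.
func (b *Breaker) record(err error) {
	b.mu.Lock()
	defer b.mu.Unlock()
	if err == nil {
		b.failures = 0
		b.state = Closed
		return
	}
	b.failures++
	if b.state == HalfOpen || b.failures >= b.maxFailures {
		b.state = Open
		b.nextRetry = time.Now().Add(b.timeout)
	}
}

// Call runs fn without holding the lock, so slow calls do not block others.
func (b *Breaker) Call(fn func() error) error {
	if !b.allow() {
		return ErrOpen
	}
	err := fn()
	b.record(err)
	return err
}

func main() {
	b := NewBreaker(2, time.Minute)
	fail := func() error { return errUnavailable }
	for i := 1; i <= 4; i++ {
		err := b.Call(fail)
		fmt.Printf("call %d: rejected=%v err=%v\n", i, errors.Is(err, ErrOpen), err)
	}
}
```

With a sentinel error, callers can use errors.Is(err, ErrOpen) to decide whether to serve a fallback instead of reporting a downstream failure.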

Advanced Circuit Breaker with HTTP Integration

Now let’s build a more sophisticated circuit breaker specifically designed for HTTP services with better error handling and observability:

package main

import (
	"errors"
	"fmt"
	"net/http"
	"sync"
	"time"
)

// State represents circuit breaker states
type State int

const (
	Closed State = iota
	Open
	HalfOpen
)

// HTTPCircuitBreaker wraps HTTP requests with circuit breaker protection
type HTTPCircuitBreaker struct {
	mu          sync.Mutex
	state       State
	failures    int
	nextRetry   time.Time
	maxFailures int
	timeout     time.Duration
	client      *http.Client
}

// New creates an HTTP circuit breaker
func New(maxFailures int, timeout time.Duration) *HTTPCircuitBreaker {
	return &HTTPCircuitBreaker{
		state:       Closed,
		maxFailures: maxFailures,
		timeout:     timeout,
		// Client.Timeout bounds the whole exchange, including reading the body
		client: &http.Client{Timeout: 5 * time.Second},
	}
}

// Do executes an HTTP request with circuit breaker protection.
// The mutex is held for the duration of the request, so calls are serialized.
func (cb *HTTPCircuitBreaker) Do(req *http.Request) (*http.Response, error) {
	cb.mu.Lock()
	defer cb.mu.Unlock()

	// Check if request is allowed
	if cb.state == Open {
		if time.Now().Before(cb.nextRetry) {
			return nil, errors.New("circuit breaker is open")
		}
		cb.state = HalfOpen
	}

	// Execute request. The deadline comes from cb.client.Timeout: a context
	// that is cancelled when Do returns would abort the caller's read of
	// resp.Body.
	resp, err := cb.client.Do(req)

	// Transport errors and 5xx responses count as failures
	success := err == nil && resp != nil && resp.StatusCode < 500

	// Update state based on result
	if !success {
		cb.failures++
		if cb.failures >= cb.maxFailures {
			cb.state = Open
			cb.nextRetry = time.Now().Add(cb.timeout)
			fmt.Printf("Circuit opened after %d failures\n", cb.failures)
		}
		if err != nil {
			return nil, err
		}
		// The caller still receives the 5xx response and must close its body
		return resp, nil
	}

	// Success - reset
	cb.failures = 0
	if cb.state == HalfOpen {
		cb.state = Closed
		fmt.Println("Circuit closed after successful recovery")
	}
	return resp, nil
}

// GetState returns the current circuit breaker state
func (cb *HTTPCircuitBreaker) GetState() State {
	cb.mu.Lock()
	defer cb.mu.Unlock()
	return cb.state
}

// Example usage
func main() {
	cb := New(3, 10*time.Second)

	// Test with a failing endpoint
	for i := 0; i < 15; i++ {
		req, err := http.NewRequest(http.MethodGet, "http://httpbin.org/status/500", nil)
		if err != nil {
			fmt.Printf("Request %d: cannot build request: %v\n", i+1, err)
			return
		}

		resp, err := cb.Do(req)
		state := cb.GetState()

		status := 0
		if resp != nil {
			status = resp.StatusCode
			resp.Body.Close()
		}

		fmt.Printf("Request %d: State=%v, Status=%d, Error=%v\n",
			i+1, state, status, err)
		time.Sleep(2 * time.Second)
	}
}

This version wraps an http.Client, treats transport errors and 5xx responses as failures, logs state changes, and exposes the current state through GetState. It does not collect metrics; those are discussed under Best Practices.
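Which outcomes count as failures is a design decision worth isolating in one function. The following sketch is one possible classification, not the rule used above: countsAsFailure is a hypothetical helper, and treating HTTP 429 as a failure while ignoring caller-side cancellation are assumptions that may not suit every service.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"net/http"
)

// Demo errors used in main below.
var errRefused = errors.New("dial tcp: connection refused")
var errCallerGone = fmt.Errorf("fetch profile: %w", context.Canceled)

// countsAsFailure reports whether an outcome should increment the
// breaker's failure counter.
func countsAsFailure(statusCode int, err error) bool {
	if err != nil {
		// The caller gave up; that says nothing about the dependency's health.
		// Timeouts (context.DeadlineExceeded) still count as failures.
		return !errors.Is(err, context.Canceled)
	}
	// 5xx means the dependency failed; 429 means it is shedding load.
	return statusCode >= 500 || statusCode == http.StatusTooManyRequests
}

func main() {
	fmt.Println("500:", countsAsFailure(http.StatusInternalServerError, nil))
	fmt.Println("404:", countsAsFailure(http.StatusNotFound, nil))
	fmt.Println("429:", countsAsFailure(http.StatusTooManyRequests, nil))
	fmt.Println("refused:", countsAsFailure(0, errRefused))
	fmt.Println("caller cancelled:", countsAsFailure(0, errCallerGone))
}
```

Client errors such as 404 are deliberately excluded: they usually indicate a bad request rather than an unhealthy dependency.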

Implementing Fallback Strategies

A crucial aspect of circuit breakers is providing fallback mechanisms when services are unavailable. Here’s an implementation that includes various fallback strategies:

package main

import (
	"context"
	"errors"
	"fmt"
	"sync"
	"time"
)

// State represents circuit breaker states
type State int

const (
	Closed State = iota
	Open
	HalfOpen
)

// Response represents a service response
type Response struct {
	Data   interface{}
	Source string // "primary" or "cache"
}

// CircuitBreaker with cache fallback support
type CircuitBreaker struct {
	mu          sync.Mutex
	state       State
	failures    int
	nextRetry   time.Time
	maxFailures int
	timeout     time.Duration
	cache       map[string]*Response // last successful response per key; unbounded
}

// New creates a circuit breaker with cache support
func New(maxFailures int, timeout time.Duration) *CircuitBreaker {
	return &CircuitBreaker{
		state:       Closed,
		maxFailures: maxFailures,
		timeout:     timeout,
		cache:       make(map[string]*Response),
	}
}

// Call executes a function with fallback to cached data
func (cb *CircuitBreaker) Call(
	ctx context.Context,
	cacheKey string,
	fn func(context.Context) (interface{}, error),
) (*Response, error) {
	cb.mu.Lock()
	defer cb.mu.Unlock()

	// An open circuit becomes half-open once the retry time has passed
	if cb.state == Open && time.Now().After(cb.nextRetry) {
		cb.state = HalfOpen
	}

	// lastErr is reported if there is no cached value to fall back to
	lastErr := errors.New("circuit breaker is open")

	// Try primary service unless the circuit is still open
	if cb.state != Open {
		// Add timeout
		timeoutCtx, cancel := context.WithTimeout(ctx, 5*time.Second)
		defer cancel()

		data, err := fn(timeoutCtx)
		if err == nil {
			// Success - cache and reset
			cb.cache[cacheKey] = &Response{Data: data, Source: "primary"}
			cb.failures = 0
			cb.state = Closed
			return &Response{Data: data, Source: "primary"}, nil
		}

		// Failure - update state
		lastErr = err
		cb.failures++
		if cb.failures >= cb.maxFailures {
			cb.state = Open
			cb.nextRetry = time.Now().Add(cb.timeout)
			fmt.Printf("Circuit opened after %d failures\n", cb.failures)
		}
	}

	// Try cache fallback. Return a copy so the stored entry, and any
	// response handed out earlier, is not mutated.
	if cached, ok := cb.cache[cacheKey]; ok {
		return &Response{Data: cached.Data, Source: "cache"}, nil
	}

	return nil, fmt.Errorf("no cached fallback available: %w", lastErr)
}

// Example usage
func main() {
	cb := New(3, 10*time.Second)

	// Simulate service calls
	for i := 0; i < 10; i++ {
		ctx := context.Background()

		response, err := cb.Call(ctx, "user-data", func(ctx context.Context) (interface{}, error) {
			// First call succeeds to populate cache, rest fail
			if i == 0 {
				return map[string]string{"status": "ok", "data": "test"}, nil
			}
			return nil, errors.New("service unavailable")
		})

		if err != nil {
			fmt.Printf("Call %d failed: %v\n", i+1, err)
		} else {
			fmt.Printf("Call %d succeeded: Source=%s, Data=%v\n",
				i+1, response.Source, response.Data)
		}

		time.Sleep(2 * time.Second)
	}
}

This implementation shows one fallback strategy: serving the last successful response from an in-memory cache when the primary call fails or the circuit is open. Static default responses and calls to an alternative service can be layered on in the same way.

Best Practices

Implementing circuit breakers effectively requires following several key best practices:

1. Choose Appropriate Thresholds and Timeouts

Set failure thresholds based on your service’s normal failure rates. A threshold of 50-60% failure rate over a sliding window is often more effective than counting consecutive failures. For timeouts, start with conservative values:

- Reset timeout: 30-60 seconds for most services

- Request timeout: 2-10 seconds depending on service SLA

- Half-open test requests: 3-5 requests to determine recovery

2. Implement Proper Metrics and Monitoring

Circuit breakers should expose comprehensive metrics for observability:

type CircuitBreakerMetrics struct {
	State               string        `json:"state"`
	TotalRequests       int64         `json:"total_requests"`
	SuccessfulRequests  int64         `json:"successful_requests"`
	FailedRequests      int64         `json:"failed_requests"`
	CircuitOpenCount    int64         `json:"circuit_open_count"`
	LastStateChange     time.Time     `json:"last_state_change"`
	AverageResponseTime time.Duration `json:"avg_response_time"`
}

3. Use Sliding Window for Failure Detection

Instead of counting consecutive failures, implement a sliding window approach that considers failure rate over time. This prevents temporary spikes from triggering the circuit breaker unnecessarily.
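A minimal way to sketch this is a fixed-size ring buffer of recent outcomes. Note the simplification: the window below is count-based (the last N calls) rather than time-based, the names window, record, and shouldTrip are hypothetical, and the type is not safe for concurrent use without external locking.

```go
package main

import "fmt"

// window tracks the outcomes of the most recent calls in a ring buffer.
type window struct {
	outcomes []bool // true means the call failed
	next     int    // index of the slot to overwrite next
	filled   int    // number of slots that hold real data
	failures int    // failures currently inside the window
}

func newWindow(size int) *window {
	return &window{outcomes: make([]bool, size)}
}

// record adds one outcome, evicting the oldest once the buffer is full.
func (w *window) record(failed bool) {
	if w.filled == len(w.outcomes) {
		if w.outcomes[w.next] {
			w.failures-- // the evicted outcome was a failure
		}
	} else {
		w.filled++
	}
	w.outcomes[w.next] = failed
	if failed {
		w.failures++
	}
	w.next = (w.next + 1) % len(w.outcomes)
}

// shouldTrip reports whether the failure rate has reached threshold.
// It returns false until at least minCalls outcomes have been recorded,
// so a single early failure cannot open the circuit.
func (w *window) shouldTrip(threshold float64, minCalls int) bool {
	if w.filled == 0 || w.filled < minCalls {
		return false
	}
	return float64(w.failures)/float64(w.filled) >= threshold
}

func main() {
	w := newWindow(10)
	for i := 0; i < 10; i++ {
		w.record(i%2 == 0) // every other call fails
	}
	fmt.Println("trip at 50%:", w.shouldTrip(0.5, 10))
	fmt.Println("trip at 60%:", w.shouldTrip(0.6, 10))
}
```

The minCalls guard matters in practice: without it, one failure out of one call is a 100% failure rate.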

4. Implement Graceful Degradation

Design fallback responses that provide meaningful functionality rather than generic error messages. Cache previous successful responses, use default values, or redirect to alternative services.
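The layered approach can be sketched as an ordered list of sources that are tried until one succeeds. This is an illustrative sketch only: the source type and firstAvailable function are hypothetical, and the values are plain strings to keep the example short.

```go
package main

import (
	"errors"
	"fmt"
)

// errDown simulates an unavailable source in the demo below.
var errDown = errors.New("source unavailable")

// source is one way of producing a value, such as the primary service,
// a cache lookup, or a static default.
type source func() (string, error)

// firstAvailable tries each source in order and returns the first value
// that succeeds, together with the index of the source that produced it.
// If every source fails, it returns the last error seen.
func firstAvailable(sources ...source) (string, int, error) {
	err := errors.New("no sources configured")
	for i, src := range sources {
		var v string
		if v, err = src(); err == nil {
			return v, i, nil
		}
	}
	return "", -1, err
}

func main() {
	primary := func() (string, error) { return "", errDown }
	cache := func() (string, error) { return "", errDown }
	static := func() (string, error) { return "default recommendations", nil }

	v, i, err := firstAvailable(primary, cache, static)
	fmt.Printf("value=%q from source %d, err=%v\n", v, i, err)
}
```

Returning the index lets the caller record which layer served the request, which is useful for fallback metrics.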

5. Configure Per-Service Circuit Breakers

Don’t use a single circuit breaker for all external dependencies. Each service should have its own circuit breaker with tailored configuration based on its specific characteristics and SLA requirements.

6. Handle Context Cancellation Properly

Always respect context cancellation and timeouts in your circuit breaker implementation to prevent resource leaks and ensure proper cleanup.

7. Test Circuit Breaker Behavior

Implement comprehensive tests that verify state transitions, timeout handling, and fallback mechanisms. Use chaos engineering principles to test circuit breaker behavior under various failure scenarios.
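Timeout handling is easier to test when the breaker reads time through an injected function instead of calling time.Now directly. The sketch below isolates just that idea and rests on assumptions: fakeClock and timedGate are hypothetical names, and timedGate models only the open-period deadline, not a full breaker.

```go
package main

import (
	"fmt"
	"time"
)

// fakeClock is a test helper: a clock that only moves when told to.
type fakeClock struct{ now time.Time }

func (c *fakeClock) Now() time.Time          { return c.now }
func (c *fakeClock) Advance(d time.Duration) { c.now = c.now.Add(d) }

// timedGate is the time-dependent part of a breaker. It stays shut until
// the retry deadline has passed, reading time through the injected func.
type timedGate struct {
	now       func() time.Time
	nextRetry time.Time
}

// trip closes the gate for the given timeout.
func (g *timedGate) trip(timeout time.Duration) {
	g.nextRetry = g.now().Add(timeout)
}

// allows reports whether the retry deadline has been reached.
func (g *timedGate) allows() bool {
	return !g.now().Before(g.nextRetry)
}

func main() {
	clk := &fakeClock{now: time.Unix(0, 0)}
	g := &timedGate{now: clk.Now}

	g.trip(30 * time.Second)
	fmt.Println("allowed right after trip:", g.allows())

	clk.Advance(30 * time.Second)
	fmt.Println("allowed after 30s:", g.allows())
}
```

In production code the same field would be set to time.Now, so tests can cover the open-to-half-open transition without sleeping.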

Common Pitfalls and How to Avoid Them

1. Setting Thresholds Too Low

Problem: Overly sensitive circuit breakers that trip on minor network hiccups or temporary load spikes.

Solution: Analyze your service’s normal failure patterns and set thresholds based on statistical analysis. Use percentage-based thresholds rather than absolute counts, and implement proper sliding window calculations.

2. Inadequate Fallback Strategies

Problem: Circuit breakers that simply return errors without providing alternative functionality.

Solution: Design meaningful fallback responses that maintain core functionality. Implement multiple fallback layers: cache → static response → alternative service → graceful degradation.

3. Ignoring Half-Open State Logic

Problem: Poor implementation of the half-open state that either doesn’t test recovery properly or allows too many requests through.

Solution: Limit the number of test requests in half-open state and implement proper success criteria for transitioning back to closed state. Use exponential backoff for retry timing.
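The backoff part of this advice can be sketched as a function that lengthens the open period each time a probe fails. This is a hedged illustration: openTimeout is a hypothetical helper, and doubling with a fixed cap is just one common schedule (jitter is omitted).

```go
package main

import (
	"fmt"
	"time"
)

// openTimeout returns how long the circuit should stay open after it has
// tripped `trips` times in a row (1 for the first trip). The wait doubles
// on each consecutive trip and is capped at max.
func openTimeout(base, max time.Duration, trips int) time.Duration {
	d := base
	for i := 1; i < trips; i++ {
		if d > max/2 {
			return max // doubling again would exceed the cap
		}
		d *= 2
	}
	if d > max {
		return max
	}
	return d
}

func main() {
	for trips := 1; trips <= 6; trips++ {
		fmt.Printf("trip %d: stay open for %v\n",
			trips, openTimeout(5*time.Second, 60*time.Second, trips))
	}
}
```

A breaker using this would reset the trip count to zero when a probe succeeds, so a recovered service returns to the base timeout.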

4. Thread Safety Issues

Problem: Race conditions when multiple goroutines access circuit breaker state simultaneously.

Solution: Use proper synchronization with sync.RWMutex for state access. Minimize critical sections and consider using atomic operations for simple counters.

5. Memory Leaks in Caching

Problem: Unbounded cache growth leading to memory exhaustion.

Solution: Implement cache eviction policies with TTL, maximum size limits, and LRU eviction. Regularly clean up expired entries and monitor cache memory usage.

Performance Considerations

Circuit breakers add overhead to service calls, but proper implementation can minimize performance impact:

1. Minimize Lock Contention

Use read-write mutexes and keep critical sections small:

// Good: Minimal critical section (assumes cb.mu is a sync.RWMutex)
func (cb *CircuitBreaker) isAllowed() bool {
	cb.mu.RLock()
	state := cb.state
	cb.mu.RUnlock()

	// Process state outside of lock
	return state == Closed
}

// Bad: Extended critical section
func (cb *CircuitBreaker) processRequest() {
	cb.mu.Lock()
	defer cb.mu.Unlock()

	// Long processing while holding lock
	time.Sleep(time.Millisecond) // Simulated work
	cb.updateMetrics()
}

2. Use Atomic Operations for Counters

For high-frequency operations, consider atomic operations:

import "sync/atomic"

type AtomicCircuitBreaker struct {
	state        int32 // Use atomic operations
	requestCount int64
	failureCount int64
}

func (acb *AtomicCircuitBreaker) incrementRequests() {
	atomic.AddInt64(&acb.requestCount, 1)
}
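Atomics can also guard a state transition, not just counters. The sketch below uses a compare-and-swap so that exactly one goroutine moves the breaker from open to half-open and becomes the probe. The atomicState type, the tryProbe method, and the state constants are hypothetical names chosen for this example.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

const (
	stateClosed int32 = iota
	stateOpen
	stateHalfOpen
)

type atomicState struct {
	state int32 // accessed only through sync/atomic
}

// tryProbe atomically moves the breaker from open to half-open.
// It returns true for the single caller that wins the swap.
func (a *atomicState) tryProbe() bool {
	return atomic.CompareAndSwapInt32(&a.state, stateOpen, stateHalfOpen)
}

func main() {
	a := &atomicState{state: stateOpen}

	var winners int32
	var wg sync.WaitGroup
	for i := 0; i < 100; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			if a.tryProbe() {
				atomic.AddInt32(&winners, 1)
			}
		}()
	}
	wg.Wait()

	fmt.Println("goroutines allowed to probe:", winners) // prints 1
}
```

Because the swap succeeds only when the current value is stateOpen, every later caller sees stateHalfOpen and is turned away without taking a lock.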

3. Optimize Memory Allocation

Pre-allocate structures and reuse objects where possible:

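// ServiceResponse is assumed to be a struct with Data and Source fields.
var responsePool = sync.Pool{
	New: func() interface{} {
		return &ServiceResponse{}
	},
}

func getResponse() *ServiceResponse {
	return responsePool.Get().(*ServiceResponse)
}

// putResponse clears the fields before returning the object to the pool
// so stale data is never handed to the next caller.
func putResponse(resp *ServiceResponse) {
	resp.Data = nil
	resp.Source = ""
	responsePool.Put(resp)
}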

4. Implement Efficient Metrics Collection

Avoid expensive operations in the hot path by using background metric aggregation:

type MetricsCollector struct {
	requestChan chan MetricEvent
	metrics     *CircuitBreakerMetrics
	mu          sync.RWMutex
}

type MetricEvent struct {
	Type      string
	Timestamp time.Time
	Duration  time.Duration
}

func (mc *MetricsCollector) Start() {
	go func() {
		ticker := time.NewTicker(1 * time.Second)
		defer ticker.Stop()

		for {
			select {
			case event := <-mc.requestChan:
				mc.processEvent(event)
			case <-ticker.C:
				mc.aggregateMetrics()
			}
		}
	}()
}

func (cb *CircuitBreaker) recordMetric(eventType string, duration time.Duration) {
	// Non-blocking metric recording
	select {
	case cb.metricsCollector.requestChan <- MetricEvent{
		Type:      eventType,
		Timestamp: time.Now(),
		Duration:  duration,
	}:
	default:
		// Drop metric if channel is full to avoid blocking
	}
}

5. Cache Optimization

Implement efficient cache management with proper eviction policies:

type LRUCache struct {
	capacity int
	items    map[string]*CacheNode
	head     *CacheNode
	tail     *CacheNode
	mu       sync.RWMutex
}

type CacheNode struct {
	key   string
	value *CacheEntry
	prev  *CacheNode
	next  *CacheNode
}

func (c *LRUCache) Get(key string) *CacheEntry {
	// Get reorders the list via moveToHead, so it needs the write lock;
	// a read lock would allow concurrent mutation of the list.
	c.mu.Lock()
	defer c.mu.Unlock()

	if node, exists := c.items[key]; exists && !node.value.IsExpired() {
		c.moveToHead(node)
		return node.value
	}
	return nil
}

Monitoring and Alerting

Effective circuit breaker monitoring requires comprehensive observability:

1. Essential Metrics to Track

// Requires github.com/prometheus/client_golang/prometheus.
// The vectors are held as pointers, as returned by prometheus.NewGaugeVec,
// prometheus.NewCounterVec, and prometheus.NewHistogramVec.
type CircuitBreakerTelemetry struct {
	// State metrics
	StateGauge   *prometheus.GaugeVec
	StateChanges *prometheus.CounterVec

	// Request metrics
	RequestsTotal   *prometheus.CounterVec
	RequestDuration *prometheus.HistogramVec

	// Error metrics
	FailuresTotal  *prometheus.CounterVec
	FallbacksTotal *prometheus.CounterVec

	// Cache metrics
	CacheHits   *prometheus.CounterVec
	CacheMisses *prometheus.CounterVec
}

func (t *CircuitBreakerTelemetry) RecordStateChange(from, to string) {
	t.StateChanges.WithLabelValues(from, to).Inc()
	t.StateGauge.WithLabelValues(to).Set(1)
	t.StateGauge.WithLabelValues(from).Set(0)
}

2. Alerting Rules

Set up alerts for critical circuit breaker events:

# Prometheus alerting rules
groups:
  - name: circuit_breaker_alerts
    rules:
      - alert: CircuitBreakerOpen
        expr: circuit_breaker_state{state="open"} == 1
        for: 30s
        labels:
          severity: warning
        annotations:
          summary: "Circuit breaker {{ $labels.service }} is open"
          description: "Circuit breaker for {{ $labels.service }} has been open for more than 30 seconds"

      - alert: HighFallbackRate
        expr: rate(circuit_breaker_fallbacks_total[5m]) > 0.1
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: "High fallback rate for {{ $labels.service }}"
          description: "Fallback rate for {{ $labels.service }} is {{ $value }} requests/second"

Conclusion

The Circuit Breaker pattern is an essential component of resilient distributed systems, providing protection against cascading failures while maintaining system availability. Through this comprehensive guide, we’ve explored:

Key Implementation Aspects:

- State Management: Proper handling of closed, open, and half-open states with thread-safe transitions

- Failure Detection: Configurable thresholds and sliding window approaches for accurate failure detection

- Fallback Strategies: Multiple layers of resilience including caching, static responses, and alternative services

- HTTP Integration: Production-ready HTTP client protection with timeout handling and error classification

Critical Design Principles:

- Fail-Fast Philosophy: Quick failure detection prevents resource exhaustion and reduces user wait times

- Graceful Degradation: Meaningful fallback responses maintain core functionality during outages

- Observability: Comprehensive metrics and logging enable effective monitoring and debugging

- Configurability: Flexible parameters allow tuning for specific service characteristics and SLA requirements

Production Readiness:

- Thread Safety: Proper synchronization ensures safe concurrent access in high-load environments

- Performance Optimization: Minimal overhead through efficient locking and memory management

- Testing Coverage: Comprehensive test suites validate behavior under various failure scenarios

- Monitoring Integration: Rich metrics support operational visibility and alerting

Best Practices for Success:

- Start Conservative: Begin with higher thresholds and longer timeouts, then tune based on observed behavior

- Monitor Continuously: Track circuit breaker metrics alongside application performance indicators

- Test Failure Scenarios: Regularly validate circuit breaker behavior through chaos engineering practices

- Design for Recovery: Implement proper half-open state logic that accurately detects service recovery

- Plan Fallback Strategies: Design multiple layers of fallbacks that provide meaningful functionality

The circuit breaker implementations presented here provide a solid foundation for building resilient Go applications. Remember that circuit breakers are just one component of a comprehensive resilience strategy that should also include retry policies, bulkhead patterns, and proper monitoring.

As distributed systems continue to grow in complexity, circuit breakers become increasingly critical for maintaining system stability and user experience. The patterns and practices outlined in this guide will help you build robust, production-ready circuit breakers that protect your services while providing excellent observability and operational control.

By implementing these patterns thoughtfully and monitoring them effectively, you’ll create systems that gracefully handle failures, maintain user experience during outages, and provide clear visibility into system health. The investment in proper circuit breaker implementation pays dividends in system reliability, operational confidence, and user satisfaction.

Integration with Popular Go Frameworks

Circuit breakers work best when integrated seamlessly with existing frameworks and libraries. Here are practical integration examples:

1. Gin Web Framework Integration

```go
package main

import (
	"log"
	"net/http"
	"time"

	"github.com/gin-gonic/gin"
)

// CircuitBreakerMiddleware creates Gin middleware for circuit breaker protection.
func CircuitBreakerMiddleware(cb *ResilientCircuitBreaker) gin.HandlerFunc {
	return func(c *gin.Context) {
		// Skip the circuit breaker for health checks
		if c.Request.URL.Path == "/health" {
			c.Next()
			return
		}

		// Check circuit breaker state before processing
		if !cb.isCallAllowed() {
			c.JSON(http.StatusServiceUnavailable, gin.H{
				"error":       "Service temporarily unavailable",
				"status":      "circuit_open",
				"retry_after": cb.config.ResetTimeout.Seconds(),
			})
			c.Abort()
			return
		}

		// Response headers must be set before the handler writes the response,
		// so expose the breaker state up front.
		c.Header("X-Circuit-Breaker-State", cb.getStateString())

		// Process request and record result
		start := time.Now()
		c.Next()

		// Record success/failure based on status code: any 5xx counts as a failure
		success := c.Writer.Status() < http.StatusInternalServerError
		cb.recordResult(success)

		// The handler has normally written the response by now, so a header set
		// here would not reach the client; log the elapsed time instead.
		log.Printf("%s %s took %s", c.Request.Method, c.Request.URL.Path, time.Since(start))
	}
}

// Usage example
func main() {
	config := ResilientConfig{
		MaxFailures:  5,
		ResetTimeout: 30 * time.Second,
	}
	cb := NewResilientCircuitBreaker(config)

	r := gin.Default()
	r.Use(CircuitBreakerMiddleware(cb))

	r.GET("/api/data", func(c *gin.Context) {
		// Your API logic here
		c.JSON(http.StatusOK, gin.H{"message": "success"})
	})

	if err := r.Run(":8080"); err != nil {
		log.Fatalf("server exited: %v", err)
	}
}
```
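
The same idea carries over to plain `net/http` when Gin is not in use. The following is a minimal sketch rather than the article's implementation: the `Breaker` interface, `WithBreaker`, `fixedBreaker`, and `probe` are hypothetical names introduced only for illustration, and `ResilientCircuitBreaker` would need small exported wrapper methods to satisfy the interface. It shows the state header being set before the handler runs, and the status code being captured through a wrapping `ResponseWriter`.

```go
package main

import (
	"net/http"
	"net/http/httptest"
	"strconv"
	"time"
)

// Breaker is a hypothetical minimal interface, not part of the article's API.
type Breaker interface {
	Allow() bool
	Record(success bool)
	State() string
	RetryAfter() time.Duration
}

// statusRecorder remembers the status code written by the wrapped handler.
type statusRecorder struct {
	http.ResponseWriter
	status int
}

func (r *statusRecorder) WriteHeader(code int) {
	r.status = code
	r.ResponseWriter.WriteHeader(code)
}

// WithBreaker wraps next with circuit breaker protection.
func WithBreaker(b Breaker, next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		// Headers only reach the client if set before the response is written.
		w.Header().Set("X-Circuit-Breaker-State", b.State())

		if !b.Allow() {
			secs := int(b.RetryAfter().Seconds())
			w.Header().Set("Retry-After", strconv.Itoa(secs))
			http.Error(w, "service temporarily unavailable", http.StatusServiceUnavailable)
			return
		}

		// Default to 200 because handlers may write a body without calling WriteHeader.
		rec := &statusRecorder{ResponseWriter: w, status: http.StatusOK}
		next.ServeHTTP(rec, r)
		b.Record(rec.status < http.StatusInternalServerError)
	})
}

// fixedBreaker is a stand-in with a manually toggled state, used only by probe.
type fixedBreaker struct {
	open     bool
	recorded []bool
}

func (f *fixedBreaker) Allow() bool         { return !f.open }
func (f *fixedBreaker) Record(success bool) { f.recorded = append(f.recorded, success) }
func (f *fixedBreaker) State() string {
	if f.open {
		return "open"
	}
	return "closed"
}
func (f *fixedBreaker) RetryAfter() time.Duration { return 30 * time.Second }

// probe sends one GET through the middleware to a handler that replies with
// handlerStatus, and returns the recorded response.
func probe(b Breaker, handlerStatus int) *httptest.ResponseRecorder {
	h := WithBreaker(b, http.HandlerFunc(func(w http.ResponseWriter, _ *http.Request) {
		w.WriteHeader(handlerStatus)
	}))
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, "/api/data", nil))
	return rec
}
```

Calling `probe(&fixedBreaker{open: true}, 200)` never reaches the handler: the middleware answers with 503 and a `Retry-After` header, which is the fail-fast behavior the Gin version aims for.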

2. gRPC Integration

```go
package main

import (
	"context"

	"google.golang.org/grpc"
	"google.golang.org/grpc/codes"
	"google.golang.org/grpc/status"
)

// CircuitBreakerUnaryInterceptor creates a gRPC unary server interceptor.
func CircuitBreakerUnaryInterceptor(cb *ResilientCircuitBreaker) grpc.UnaryServerInterceptor {
	return func(
		ctx context.Context,
		req interface{},
		info *grpc.UnaryServerInfo,
		handler grpc.UnaryHandler,
	) (interface{}, error) {
		if !cb.isCallAllowed() {
			return nil, status.Error(codes.Unavailable, "circuit breaker is open")
		}

		resp, err := handler(ctx, req)

		// Record result based on gRPC status. Only codes.Internal counts as a
		// failure here; other codes (for example Unavailable or
		// DeadlineExceeded) are treated as successes, so extend this check if
		// they should trip the breaker for your service.
		success := err == nil || status.Code(err) != codes.Internal
		cb.recordResult(success)

		return resp, err
	}
}

// CircuitBreakerStreamInterceptor creates a gRPC stream server interceptor.
func CircuitBreakerStreamInterceptor(cb *ResilientCircuitBreaker) grpc.StreamServerInterceptor {
	return func(
		srv interface{},
		stream grpc.ServerStream,
		info *grpc.StreamServerInfo,
		handler grpc.StreamHandler,
	) error {
		if !cb.isCallAllowed() {
			return status.Error(codes.Unavailable, "circuit breaker is open")
		}

		// The result is recorded once, when the whole stream finishes.
		err := handler(srv, stream)

		success := err == nil || status.Code(err) != codes.Internal
		cb.recordResult(success)

		return err
	}
}
```

3. Database Connection Pool Integration

```go
package main

import (
	"context"
	"database/sql"
	"fmt"
	"time"

	_ "github.com/lib/pq" // PostgreSQL driver, registered for database/sql
)

// DBCircuitBreaker wraps database operations with circuit breaker protection.
type DBCircuitBreaker struct {
	db *sql.DB
	cb *ResilientCircuitBreaker
}

func NewDBCircuitBreaker(db *sql.DB, config ResilientConfig) *DBCircuitBreaker {
	return &DBCircuitBreaker{
		db: db,
		cb: NewResilientCircuitBreaker(config),
	}
}

// Query executes a query with circuit breaker protection.
// The caller is responsible for closing the returned rows.
func (dbcb *DBCircuitBreaker) Query(ctx context.Context, query string, args ...interface{}) (*sql.Rows, error) {
	response, err := dbcb.cb.CallWithFallback(ctx, query, func(ctx context.Context) (*ServiceResponse, error) {
		rows, err := dbcb.db.QueryContext(ctx, query, args...)
		if err != nil {
			return nil, err
		}
		return &ServiceResponse{
			Data:      rows,
			Timestamp: time.Now(),
			Source:    "database",
		}, nil
	})
	if err != nil {
		return nil, err
	}

	// A fallback response may carry something other than *sql.Rows,
	// so check the type instead of asserting blindly.
	if rows, ok := response.Data.(*sql.Rows); ok {
		return rows, nil
	}
	return nil, fmt.Errorf("unexpected response type %T from circuit breaker", response.Data)
}

// Exec executes a statement with circuit breaker protection.
func (dbcb *DBCircuitBreaker) Exec(ctx context.Context, query string, args ...interface{}) (sql.Result, error) {
	response, err := dbcb.cb.CallWithFallback(ctx, query, func(ctx context.Context) (*ServiceResponse, error) {
		result, err := dbcb.db.ExecContext(ctx, query, args...)
		if err != nil {
			return nil, err
		}
		return &ServiceResponse{
			Data:      result,
			Timestamp: time.Now(),
			Source:    "database",
		}, nil
	})
	if err != nil {
		return nil, err
	}

	if result, ok := response.Data.(sql.Result); ok {
		return result, nil
	}
	return nil, fmt.Errorf("unexpected response type %T from circuit breaker", response.Data)
}
```

Advanced Configuration Patterns

For production deployments, circuit breakers need sophisticated configuration management:

1. Dynamic Configuration Updates

```go
package main

import (
	"context"
	"encoding/json"
	"log"
	"sync"

	clientv3 "go.etcd.io/etcd/client/v3" // module path since etcd v3.5
)

// ConfigurableCircuitBreaker supports dynamic configuration updates.
type ConfigurableCircuitBreaker struct {
	*ResilientCircuitBreaker

	configMu   sync.RWMutex
	etcdClient *clientv3.Client
	configKey  string
	stopChan   chan struct{}
	stopOnce   sync.Once
}

func NewConfigurableCircuitBreaker(
	initialConfig ResilientConfig,
	etcdClient *clientv3.Client,
	configKey string,
) *ConfigurableCircuitBreaker {
	ccb := &ConfigurableCircuitBreaker{
		ResilientCircuitBreaker: NewResilientCircuitBreaker(initialConfig),
		etcdClient:              etcdClient,
		configKey:               configKey,
		stopChan:                make(chan struct{}),
	}

	// Start configuration watcher
	go ccb.watchConfig()

	return ccb
}

func (ccb *ConfigurableCircuitBreaker) watchConfig() {
	// Cancel the watch on return so the etcd stream is released.
	ctx, cancel := context.WithCancel(context.Background())
	defer cancel()

	watchChan := ccb.etcdClient.Watch(ctx, ccb.configKey)
	for {
		select {
		case watchResp, ok := <-watchChan:
			if !ok {
				// The channel is closed when the watch ends; without this
				// check the loop would spin on zero-value responses.
				return
			}
			if err := watchResp.Err(); err != nil {
				log.Printf("Config watch error: %v", err)
				continue
			}
			for _, event := range watchResp.Events {
				if event.Type == clientv3.EventTypePut {
					ccb.updateConfig(event.Kv.Value)
				}
			}
		case <-ccb.stopChan:
			return
		}
	}
}

func (ccb *ConfigurableCircuitBreaker) updateConfig(configData []byte) {
	ccb.configMu.Lock()
	defer ccb.configMu.Unlock()

	// Decode over a copy of the current config so that fields missing from
	// the payload keep their values instead of being reset to zero.
	newConfig := ccb.config
	if err := json.Unmarshal(configData, &newConfig); err != nil {
		log.Printf("Failed to unmarshal config: %v", err)
		return
	}

	// configMu only guards access through this wrapper. The embedded breaker
	// must read its config under the same lock to avoid a data race.
	ccb.config = newConfig
	log.Printf("Circuit breaker configuration updated: %+v", newConfig)
}

func (ccb *ConfigurableCircuitBreaker) GetConfig() ResilientConfig {
	ccb.configMu.RLock()
	defer ccb.configMu.RUnlock()
	return ccb.config
}

// Stop ends the configuration watcher. It is safe to call more than once.
func (ccb *ConfigurableCircuitBreaker) Stop() {
	ccb.stopOnce.Do(func() { close(ccb.stopChan) })
}
```

2. Environment-Based Configuration

```go
package main

import (
	"os"
	"strconv"
	"time"
)

// ConfigBuilder helps build circuit breaker configuration from the environment.
type ConfigBuilder struct {
	config ResilientConfig
}

func NewConfigBuilder() *ConfigBuilder {
	return &ConfigBuilder{
		config: ResilientConfig{
			MaxFailures:      5,
			ResetTimeout:     30 * time.Second,
			RequestTimeout:   10 * time.Second,
			FallbackStrategy: FallbackStatic,
			CacheTimeout:     5 * time.Minute,
		},
	}
}

// FromEnvironment overrides settings from variables named <prefix>_MAX_FAILURES,
// <prefix>_RESET_TIMEOUT, <prefix>_REQUEST_TIMEOUT, and <prefix>_FALLBACK_URL.
// Unset, unparsable, or non-positive values are ignored and the existing value is kept.
func (b *ConfigBuilder) FromEnvironment(prefix string) *ConfigBuilder {
	if val := os.Getenv(prefix + "_MAX_FAILURES"); val != "" {
		if maxFailures, err := strconv.Atoi(val); err == nil && maxFailures > 0 {
			b.config.MaxFailures = maxFailures
		}
	}

	if val := os.Getenv(prefix + "_RESET_TIMEOUT"); val != "" {
		if timeout, err := time.ParseDuration(val); err == nil && timeout > 0 {
			b.config.ResetTimeout = timeout
		}
	}

	if val := os.Getenv(prefix + "_REQUEST_TIMEOUT"); val != "" {
		if timeout, err := time.ParseDuration(val); err == nil && timeout > 0 {
			b.config.RequestTimeout = timeout
		}
	}

	if val := os.Getenv(prefix + "_FALLBACK_URL"); val != "" {
		b.config.FallbackURL = val
		b.config.FallbackStrategy = FallbackAlternativeService
	}

	return b
}

// WithDefaults applies service-specific defaults. Unknown names leave the config unchanged.
func (b *ConfigBuilder) WithDefaults(serviceName string) *ConfigBuilder {
	switch serviceName {
	case "payment":
		b.config.MaxFailures = 3
		b.config.ResetTimeout = 60 * time.Second
		b.config.FallbackStrategy = FallbackAlternativeService
	case "analytics":
		b.config.MaxFailures = 10
		b.config.ResetTimeout = 10 * time.Second
		b.config.FallbackStrategy = FallbackCache
	case "user-profile":
		b.config.MaxFailures = 5
		b.config.ResetTimeout = 30 * time.Second
		b.config.FallbackStrategy = FallbackStatic
	}

	return b
}

func (b *ConfigBuilder) Build() ResilientConfig {
	return b.config
}

// Usage example: service defaults first, then environment overrides on top.
func main() {
	config := NewConfigBuilder().
		WithDefaults("payment").
		FromEnvironment("PAYMENT_CB").
		Build()

	cb := NewResilientCircuitBreaker(config)
	_ = cb // Use circuit breaker...
}
```

Production Deployment Considerations

1. Kubernetes Deployment with Health Checks

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: service-with-circuit-breaker
spec:
  replicas: 3
  selector:
    matchLabels:
      app: service-with-cb
  template:
    metadata:
      labels:
        app: service-with-cb
    spec:
      containers:
        - name: app
          image: myapp:latest
          ports:
            - containerPort: 8080
          env:
            # These names are read when the service calls FromEnvironment("CB")
            - name: CB_MAX_FAILURES
              value: "5"
            - name: CB_RESET_TIMEOUT
              value: "30s"
            - name: CB_REQUEST_TIMEOUT
              value: "10s"
          livenessProbe:
            httpGet:
              path: /health
              port: 8080
            initialDelaySeconds: 30
            periodSeconds: 10
          readinessProbe:
            httpGet:
              path: /ready
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 5
          resources:
            requests:
              memory: "128Mi"
              cpu: "100m"
            limits:
              memory: "256Mi"
              cpu: "200m"
---
apiVersion: v1
kind: Service
metadata:
  name: service-with-cb-service
spec:
  selector:
    app: service-with-cb
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
```

2. Comprehensive Health Check Implementation

```go
package main

import (
	"encoding/json"
	"log"
	"net/http"
	"sync"
	"time"
)

// HealthChecker provides health and readiness endpoints.
type HealthChecker struct {
	mu              sync.RWMutex // guards circuitBreakers
	circuitBreakers map[string]*ResilientCircuitBreaker
	startTime       time.Time
}

func NewHealthChecker() *HealthChecker {
	return &HealthChecker{
		circuitBreakers: make(map[string]*ResilientCircuitBreaker),
		startTime:       time.Now(),
	}
}

// RegisterCircuitBreaker is safe to call while the handlers are serving requests.
func (hc *HealthChecker) RegisterCircuitBreaker(name string, cb *ResilientCircuitBreaker) {
	hc.mu.Lock()
	defer hc.mu.Unlock()
	hc.circuitBreakers[name] = cb
}

// HealthHandler serves the liveness endpoint. It always reports healthy:
// breaker states are included for information only.
func (hc *HealthChecker) HealthHandler(w http.ResponseWriter, r *http.Request) {
	health := map[string]interface{}{
		"status":           "healthy",
		"timestamp":        time.Now(),
		"uptime":           time.Since(hc.startTime).String(),
		"circuit_breakers": hc.getCircuitBreakerStatus(),
	}

	w.Header().Set("Content-Type", "application/json")
	if err := json.NewEncoder(w).Encode(health); err != nil {
		log.Printf("Failed to encode health response: %v", err)
	}
}

// ReadinessHandler reports not ready while any critical circuit breaker is open.
func (hc *HealthChecker) ReadinessHandler(w http.ResponseWriter, r *http.Request) {
	ready := true
	cbStatus := hc.getCircuitBreakerStatus()

	// Check if any critical circuit breakers are open
	for name, metrics := range cbStatus {
		if metrics["state"] == "open" && hc.isCriticalService(name) {
			ready = false
			break
		}
	}

	status := "ready"
	statusCode := http.StatusOK
	if !ready {
		status = "not_ready"
		statusCode = http.StatusServiceUnavailable
	}

	response := map[string]interface{}{
		"status":           status,
		"timestamp":        time.Now(),
		"circuit_breakers": cbStatus,
	}

	w.Header().Set("Content-Type", "application/json")
	w.WriteHeader(statusCode)
	if err := json.NewEncoder(w).Encode(response); err != nil {
		log.Printf("Failed to encode readiness response: %v", err)
	}
}

func (hc *HealthChecker) getCircuitBreakerStatus() map[string]map[string]interface{} {
	hc.mu.RLock()
	defer hc.mu.RUnlock()

	status := make(map[string]map[string]interface{}, len(hc.circuitBreakers))
	for name, cb := range hc.circuitBreakers {
		status[name] = cb.GetMetrics()
	}
	return status
}

func (hc *HealthChecker) isCriticalService(serviceName string) bool {
	switch serviceName {
	case "payment", "auth", "user-profile":
		return true
	}
	return false
}
```
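
To connect these handlers to the probe paths in the Deployment manifest, the routes can be registered on a standard `http.ServeMux`. The sketch below is self-contained and therefore uses a hypothetical `isReady` callback in place of the `HealthChecker`; `newProbeMux` and `probeStatus` are likewise names invented for the example. It keeps liveness independent of breaker state, so that Kubernetes does not restart pods merely because a downstream dependency is failing.

```go
package main

import (
	"net/http"
	"net/http/httptest"
)

// newProbeMux registers the /health and /ready paths used by the manifest.
// isReady is a hypothetical callback; with the HealthChecker you would
// register hc.HealthHandler and hc.ReadinessHandler on these paths instead.
func newProbeMux(isReady func() bool) *http.ServeMux {
	mux := http.NewServeMux()

	// Liveness: the process is up and able to serve HTTP.
	mux.HandleFunc("/health", func(w http.ResponseWriter, _ *http.Request) {
		w.WriteHeader(http.StatusOK)
	})

	// Readiness: return 503 so the pod is removed from Service endpoints
	// until the callback reports ready again.
	mux.HandleFunc("/ready", func(w http.ResponseWriter, _ *http.Request) {
		if !isReady() {
			w.WriteHeader(http.StatusServiceUnavailable)
			return
		}
		w.WriteHeader(http.StatusOK)
	})

	return mux
}

// probeStatus issues an in-memory GET against the mux and returns the status code.
func probeStatus(mux *http.ServeMux, path string) int {
	rec := httptest.NewRecorder()
	mux.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, path, nil))
	return rec.Code
}
```

One design point to weigh: if every replica depends on the same failing service, tying readiness to an open breaker marks all pods unready at once, so callers receive no response at all instead of a fallback. Whether that is preferable depends on how useful the fallbacks are.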

Conclusion

The Circuit Breaker pattern is an essential component of resilient distributed systems, providing protection against cascading failures while maintaining system availability. Through this comprehensive guide, we’ve explored:

Key Implementation Aspects:

- State Management: Proper handling of closed, open, and half-open states with thread-safe transitions

- Failure Detection: Configurable thresholds and sliding window approaches for accurate failure detection

- Fallback Strategies: Multiple layers of resilience including caching, static responses, and alternative services

- Framework Integration: Seamless integration with popular Go frameworks like Gin, gRPC, and database libraries

Critical Design Principles:

- Fail-Fast Philosophy: Quick failure detection prevents resource exhaustion and reduces user wait times

- Graceful Degradation: Meaningful fallback responses maintain core functionality during outages

- Observability: Comprehensive metrics and logging enable effective monitoring and debugging

- Configurability: Dynamic configuration management allows runtime tuning without service restarts

Production Readiness:

- Thread Safety: Proper synchronization ensures safe concurrent access in high-load environments

- Performance Optimization: Minimal overhead through efficient locking and memory management

- Testing Coverage: Comprehensive test suites validate behavior under various failure scenarios

- Deployment Integration: Kubernetes-ready configurations with proper health checks and resource management

Best Practices for Success:

- Start Conservative: Begin with higher thresholds and longer timeouts, then tune based on observed behavior

- Monitor Continuously: Track circuit breaker metrics alongside application performance indicators

- Test Failure Scenarios: Regularly validate circuit breaker behavior through chaos engineering practices

- Design for Recovery: Implement proper half-open state logic that accurately detects service recovery

- Plan Fallback Strategies: Design multiple layers of fallbacks that provide meaningful functionality

- Integrate Thoughtfully: Choose integration points that provide maximum protection with minimal complexity

- Configure Dynamically: Use configuration management systems that allow runtime updates without downtime

The circuit breaker implementations presented here provide a solid foundation for building resilient Go applications. Remember that circuit breakers are just one component of a comprehensive resilience strategy that should also include retry policies, bulkhead patterns, rate limiting, and proper monitoring.

As distributed systems continue to grow in complexity, circuit breakers become increasingly critical for maintaining system stability and user experience. The patterns and practices outlined in this guide will help you build robust, production-ready circuit breakers that protect your services while providing excellent observability and operational control.

By implementing these patterns thoughtfully and monitoring them effectively, you’ll create systems that gracefully handle failures, maintain user experience during outages, and provide clear visibility into system health. The investment in proper circuit breaker implementation pays dividends in system reliability, operational confidence, and user satisfaction.